This post records how I installed Ubuntu 20.04 + the NVIDIA graphics driver + CUDA 11.8 + cuDNN 8.7 on an ASUS laptop with an RTX 2070 GPU, and how I verified that CUDA works on the GPU.

I. Install Ubuntu 20.04

1. Download the Ubuntu 20.04 ISO image

Download it from the official site: https://www.ubuntu.com/download/desktop

2. Write the ISO image to a USB drive

balenaEtcher is recommended; it runs on Linux, Windows, and macOS.

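3. Prepare disk space for Ubuntu

In Windows Disk Management, right-click drive C: (by default it is the only drive) and choose Shrink Volume, then enter the amount of space to split off from C:. I entered 512000 MB, i.e. 500 GB, for Ubuntu. Do not format the freed space.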

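4. Reboot and press F2 to change the BIOS settings

4.1 Set Secure Boot to Disabled

Otherwise you get a black screen.

4.2 Change the boot order so the USB drive comes before Windows 10

4.3 Change SATA Mode to AHCI

Otherwise the Ubuntu installer cannot find the disk when partitioning. However, switching to AHCI stops Windows 10 from booting; I have not found a better workaround yet.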

5. Install Ubuntu

If the installer reports "No EFI System Partition was found. This system will likely….. Go back ….", create a new partition for EFI (Use as: EFI System Partition); 100 MB is enough.

II. Install the GPU driver

1 Preparation

sudo apt-get install vim-gtk openssh-server linux-headers-$(uname -r)

sudo vim /etc/default/grub  # set the GRUB screen resolution:

GRUB_GFXMODE=1920x1080

sudo update-grub

sudo apt-get update

sudo apt-get upgrade

2 Disable the nouveau driver

sudo vim /etc/modprobe.d/blacklist.conf  # append the following lines at the end:

blacklist vga16fb

blacklist nouveau

blacklist rivafb

blacklist rivatv

blacklist nvidiafb

blacklist lbm-nouveau

options nouveau modeset=0

alias nouveau off

alias lbm-nouveau off
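Before running update-initramfs, it can help to confirm that the two lines which actually disable nouveau made it into the file, and, after the reboot below, that nouveau is no longer loaded. This is a minimal Python sketch, assuming the file paths used in this post; it reads /proc/modules, the kernel file whose contents lsmod formats. The function names are hypothetical helpers, not part of any tool.

```python
from pathlib import Path

# The two lines from the list above that actually disable nouveau.
REQUIRED = ("blacklist nouveau", "options nouveau modeset=0")


def missing_lines(conf_text: str) -> list:
    """Return the REQUIRED lines that are absent from a modprobe config."""
    present = {line.strip() for line in conf_text.splitlines()}
    return [line for line in REQUIRED if line not in present]


def module_loaded(modules_text: str, name: str = "nouveau") -> bool:
    """Each /proc/modules line starts with the module name (lsmod's first column)."""
    return any(
        line.split()[0] == name
        for line in modules_text.splitlines()
        if line.strip()
    )


if __name__ == "__main__":
    conf = Path("/etc/modprobe.d/blacklist.conf")
    if conf.exists():
        print("missing lines:", missing_lines(conf.read_text()))
    modules = Path("/proc/modules")
    if modules.exists():
        print("nouveau loaded:", module_loaded(modules.read_text()))
```

An empty "missing lines" list before the reboot and "nouveau loaded: False" after it correspond to the lsmod check below printing nothing.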

sudo update-initramfs -u

reboot

lsmod | grep nouveau  # no output means nouveau has been disabled

3 Install the RTX 2070 driver

sudo apt-get remove --purge '^nvidia-.*'  # remove any existing driver before installing (quoted so the shell does not expand the pattern)

sudo apt-get install freeglut3-dev build-essential libx11-dev libxmu-dev libxi-dev libgl1-mesa-glx libglu1-mesa libglu1-mesa-dev  # dependencies

sudo add-apt-repository ppa:graphics-drivers/ppa  # add the Graphics Drivers PPA

sudo apt-get update

ubuntu-drivers devices  # list suitable driver versions

sudo apt-get install nvidia-driver-525  # install driver version 525

cat /proc/driver/nvidia/version

NVRM version: NVIDIA UNIX x86_64 Kernel Module 525.147.05 Wed Oct 25 22:27:35 UTC 2023

GCC version: gcc version 9.4.0 (Ubuntu 9.4.0-1ubuntu1~20.04.2)

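# Alternatively, download the RTX 2070 driver from the NVIDIA website https://www.geforce.cn/drivers; the downloaded file is a .run file

# sudo sh ./NVIDIA-Linux-x86_64-525.147.run --no-opengl-files  # just press Enter through the prompts

reboot  # reboot

nvidia-smi  # check that the graphics driver works

III. Install CUDA and cuDNN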

1. Determine matching CUDA and cuDNN versions

1.1 Check the OS and GCC versions

$ cat /etc/issue

Ubuntu 20.04.6 LTS

$ gcc --version

gcc (Ubuntu 9.4.0-1ubuntu1~20.04.2) 9.4.0

1.2 Check which CUDA versions PyTorch/TensorFlow support

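- As of this writing, the latest PyTorch release, v2.1.1, supports CUDA v12.1 and v11.8.

- As of this writing, the latest TensorFlow release, v2.15.0, supports CUDA v12.2 + cuDNN v8.9.

- TensorFlow v2.14.0 supports CUDA v11.8 + cuDNN v8.7.

Taken together, the combination that works with both the latest PyTorch (v2.1.1) and TensorFlow v2.14.0 is:

- CUDA v11.8 + cuDNN v8.7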
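The version matching above is just a set intersection. A minimal Python sketch, assuming the support lists stated in this section; they change with every release, so treat the dictionaries as illustrative data to update rather than an authoritative table. The release keys and function name are hypothetical.

```python
# Supported CUDA versions per framework release, as listed in this section
# (as of this writing; update when new releases come out).
SUPPORTED_CUDA = {
    "pytorch-2.1.1": {"12.1", "11.8"},
    "tensorflow-2.15.0": {"12.2"},
    "tensorflow-2.14.0": {"11.8"},
}

# cuDNN version each TensorFlow release pairs with (same list).
TF_CUDNN = {
    "tensorflow-2.15.0": "8.9",
    "tensorflow-2.14.0": "8.7",
}


def common_cuda(*releases: str) -> set:
    """Return the CUDA versions supported by every given release."""
    return set.intersection(*(SUPPORTED_CUDA[r] for r in releases))


if __name__ == "__main__":
    for tf in ("tensorflow-2.15.0", "tensorflow-2.14.0"):
        cuda = common_cuda("pytorch-2.1.1", tf)
        print(tf, "->", sorted(cuda), "cuDNN", TF_CUDNN[tf] if cuda else "-")
```

Pairing PyTorch 2.1.1 with TensorFlow 2.15.0 yields an empty set, which is why this post settles on TensorFlow 2.14.0 and CUDA 11.8.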

1.3 Check CUDA compatibility with the Ubuntu and GCC versions

Table 1. Native Linux Distribution Support in CUDA 11.8

| Distribution | Kernel¹ | Default GCC | GLIBC |
|---|---|---|---|
| **x86_64** | | | |
| Ubuntu 22.04 LTS | 5.15.0-25 | 11.2.0 | 2.35 |
| Ubuntu 20.04.z (z <= 4) LTS | 5.13.0-30 | 9.3.0 | 2.31 |
| Ubuntu 18.04.z (z <= 6) LTS | 5.4.0-89 | 7.5.0 | 2.27 |

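The table shows that CUDA 11.8 is qualified on Ubuntu 20.04 with GCC 9.3.0 as the default compiler. This system runs Ubuntu 20.04.6 with GCC 9.4.0, slightly newer than the versions listed but on the same GCC 9 line, and the installation below works with it.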
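The same comparison can be scripted. This is a sketch that assumes the output formats shown in 1.1 (`cat /etc/issue` and the first line of `gcc --version`) and hard-codes the Ubuntu 20.04 row of Table 1; `TABLE_UBUNTU_2004` and the helper names are hypothetical.

```python
import re

# Ubuntu 20.04 row of Table 1 (CUDA 11.8): point releases up to .4, default GCC 9.3.0.
TABLE_UBUNTU_2004 = {"max_point_release": 4, "default_gcc": (9, 3, 0)}


def _version(text: str, pattern: str) -> tuple:
    m = re.search(pattern, text)
    if m is None:
        raise ValueError(f"no version matching {pattern!r}")
    return tuple(int(p) for p in m.group(1).split("."))


def ubuntu_version(issue_text: str) -> tuple:
    """'Ubuntu 20.04.6 LTS' -> (20, 4, 6)."""
    return _version(issue_text, r"Ubuntu (\d+\.\d+(?:\.\d+)?)")


def gcc_version(gcc_output: str) -> tuple:
    """The version is the last token on the first line of `gcc --version`."""
    return _version(gcc_output.splitlines()[0], r"(\d+\.\d+\.\d+)\s*$")


def notes(issue_text: str, gcc_output: str) -> list:
    """List the ways this system differs from the Ubuntu 20.04 row."""
    os_v, gcc_v = ubuntu_version(issue_text), gcc_version(gcc_output)
    out = []
    if os_v[:2] != (20, 4):
        out.append("not Ubuntu 20.04")
    elif len(os_v) == 3 and os_v[2] > TABLE_UBUNTU_2004["max_point_release"]:
        out.append("newer 20.04 point release than Table 1 lists")
    if gcc_v[0] != TABLE_UBUNTU_2004["default_gcc"][0]:
        out.append("GCC major version differs from the default in Table 1")
    return out


if __name__ == "__main__":
    print(notes("Ubuntu 20.04.6 LTS", "gcc (Ubuntu 9.4.0-1ubuntu1~20.04.2) 9.4.0"))
```

For the values in 1.1 the only note is the newer point release, matching the observation above.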

Minimum Required Driver Version for CUDA Minor Version Compatibility*

| CUDA Toolkit | Linux x86_64 Driver Version | Windows x86_64 Driver Version |
|---|---|---|
| CUDA 11.8.x | >=450.80.02 | >=452.39 |

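Running cat /proc/driver/nvidia/version shows that the installed driver is 525.147.05, which satisfies the CUDA 11.8.x driver requirement. It is also newer than 520.61.05, the driver bundled with the CUDA 11.8.0 runfile used below.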
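Driver versions have to be compared numerically, component by component; plain string comparison mis-orders them. A minimal Python sketch, assuming the /proc/driver/nvidia/version line format shown in part II; when no NVIDIA driver is loaded it falls back to that sample line from this post. The function names are hypothetical.

```python
import re

# First line of /proc/driver/nvidia/version as shown earlier in this post.
SAMPLE = ("NVRM version: NVIDIA UNIX x86_64 Kernel Module  525.147.05  "
          "Wed Oct 25 22:27:35 UTC 2023")


def parse_driver_version(text: str) -> tuple:
    """Extract the kernel module version, e.g. '525.147.05' -> (525, 147, 5)."""
    m = re.search(r"Kernel Module\s+(\d+(?:\.\d+)+)", text)
    if m is None:
        raise ValueError("no NVIDIA kernel module version found")
    return tuple(int(p) for p in m.group(1).split("."))


def meets(version: tuple, minimum: str) -> bool:
    """Numeric tuple comparison; as strings, '99' would sort after '450'."""
    return version >= tuple(int(p) for p in minimum.split("."))


if __name__ == "__main__":
    try:
        with open("/proc/driver/nvidia/version") as f:
            text = f.read()
    except OSError:
        text = SAMPLE  # no NVIDIA driver loaded here; use the sample line
    v = parse_driver_version(text)
    print("driver version:", v)
    print(">= 450.80.02 (minor version compatibility):", meets(v, "450.80.02"))
    print(">= 520.61.05 (driver in the 11.8.0 runfile):", meets(v, "520.61.05"))
```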

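1.4 Check TensorRT compatibility with the CUDA version

Consult the NVIDIA TensorRT Installation Guide for the latest TensorRT release. After comparing versions, the newest TensorRT release that supports both CUDA v11.8 and cuDNN v8.7 is v8.5.3.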

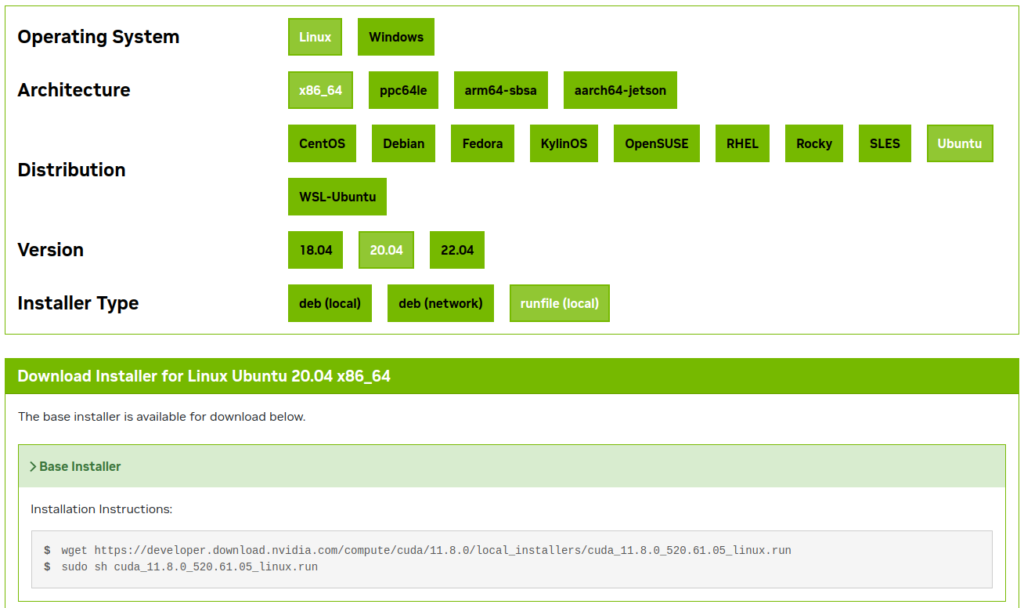

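2. Install CUDA 11.8

Download CUDA 11.8 from https://developer.nvidia.com/cuda-11-8-0-download-archive, selecting Linux + x86_64 + Ubuntu + 20.04 + runfile (local).

# Download and install CUDA 11.8 manually (don't use sudo apt install nvidia-cuda-toolkit; on Ubuntu 20.04 it installs the much older CUDA 10.1)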

wget -c https://developer.download.nvidia.com/compute/cuda/11.8.0/local_installers/cuda_11.8.0_520.61.05_linux.run

chmod +x cuda_11.8.0_520.61.05_linux.run

sudo sh cuda_11.8.0_520.61.05_linux.run

Do you accept the previously read EULA?

accept/decline/quit: accept

Install NVIDIA Accelerated Graphics Driver for Linux-x86_64 520.61.05?

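(y)es/(n)o/(q)uit: n  # the driver was already installed above, so don't install it again (in the menu-based installer, uncheck the Driver entry instead)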

Install the CUDA 11.8 Toolkit?

(y)es/(n)o/(q)uit: y

Enter Toolkit Location

[ default is /usr/local/cuda-11.8 ]:

Installing the CUDA Toolkit in /usr/local/cuda-11.8 ...

Installing the CUDA Samples in /home/work ...

Copying samples to /home/work/NVIDIA_CUDA-11.8_Samples now...

Finished copying samples.

# Check the CUDA version

cd /usr/local/cuda-11.8/bin

./nvcc --version

nvcc: NVIDIA (R) Cuda compiler driver

Copyright (c) 2005-2022 NVIDIA Corporation

Built on Wed_Sep_21_10:33:58_PDT_2022

Cuda compilation tools, release 11.8, V11.8.89

Build cuda_11.8.r11.8/compiler.31833905_0
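To make a script fail fast when the wrong toolkit is on PATH, the release number can be extracted from `nvcc --version`. A sketch that assumes the output format shown above; when nvcc is not yet on PATH it falls back to the /usr/local/cuda-11.8/bin/nvcc location used in this post. `nvcc_release` is a hypothetical helper name.

```python
import re
import shutil
import subprocess


def nvcc_release(output: str) -> str:
    """Return the release (e.g. '11.8') from `nvcc --version` output."""
    m = re.search(r"release (\d+\.\d+)", output)
    if m is None:
        raise ValueError("no release line in nvcc output")
    return m.group(1)


if __name__ == "__main__":
    nvcc = shutil.which("nvcc") or "/usr/local/cuda-11.8/bin/nvcc"
    try:
        out = subprocess.run([nvcc, "--version"], capture_output=True,
                             text=True, check=True).stdout
        print("CUDA release:", nvcc_release(out))
    except (FileNotFoundError, subprocess.CalledProcessError) as err:
        print("could not run nvcc:", err)
```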

# Add the environment variables

vim ~/.bashrc  # append the following lines:

export PATH=/usr/local/cuda-11.8/bin:${PATH}

export LD_LIBRARY_PATH=/usr/local/cuda-11.8/lib64:${LD_LIBRARY_PATH}

export CUDA_HOME=/usr/local/cuda-11.8

source ~/.bashrc

While you're at it, run nvidia-smi again to check that the GPU driver still works.

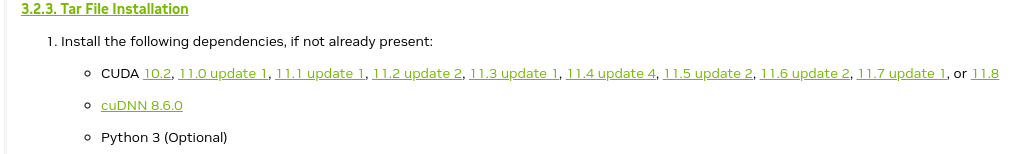
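A typo in ~/.bashrc only shows up later as "nvcc: command not found" or a missing libcudart. A minimal Python sketch that checks the three variables set above, assuming the /usr/local/cuda-11.8 prefix used in this post; `check_cuda_env` is a hypothetical helper, and it accepts any mapping so it can be tested without touching the real environment.

```python
import os

CUDA_HOME = "/usr/local/cuda-11.8"  # install prefix used in this post


def check_cuda_env(env=None) -> list:
    """Return a list of problems with the CUDA environment variables."""
    env = os.environ if env is None else env
    problems = []
    # Linux separates PATH-like entries with ':'; empty entries are harmless.
    if f"{CUDA_HOME}/bin" not in env.get("PATH", "").split(":"):
        problems.append(f"PATH lacks {CUDA_HOME}/bin")
    if f"{CUDA_HOME}/lib64" not in env.get("LD_LIBRARY_PATH", "").split(":"):
        problems.append(f"LD_LIBRARY_PATH lacks {CUDA_HOME}/lib64")
    if env.get("CUDA_HOME") != CUDA_HOME:
        problems.append(f"CUDA_HOME is not {CUDA_HOME}")
    return problems


if __name__ == "__main__":
    issues = check_cuda_env()
    print("OK" if not issues else "\n".join(issues))
```

Run it in a new terminal (or after `source ~/.bashrc`), since a script only sees the environment of the shell that starts it.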

3. Install cuDNN 8.7

https://developer.nvidia.com/rdp/cudnn-archive

Register an NVIDIA developer account and download the matching cuDNN version. I chose Download cuDNN v8.7.0 (November 28th, 2022), for CUDA 11.x, then Local Installer for Linux x86_64 (Tar).

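# Download cuDNN (the token in this URL is tied to a logged-in session and expires; copy your own link from the browser after logging in)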

wget -c -O cudnn-linux-x86_64-8.7.0.84_cuda11-archive.tar.xz "https://developer.download.nvidia.com/compute/cudnn/secure/8.7.0/local_installers/11.8/cudnn-linux-x86_64-8.7.0.84_cuda11-archive.tar.xz?_yhgesiq1TihsP6a_eh8LBpOblhF9j0gWC9-tOYrqmrXtd3VzJ9AhgaKQc3Hrel73ti3r-i4xD1O9bqFfXDB_xBws9P9RrDwr2W5AJQ8_oUCRoy_0h_b0BpdWfCHlOrS0Wez8umHVfC96DN0ltsuxnaD3ZfIcRnqNCjCyh5SzSf06ZdFtqzyYtHLW6Wgxd3jCx1qPhc8cy1D3PChIpdDHnc=&t=eyJscyI6IndlYnNpdGUiLCJsc2QiOiJkZXZlbG9wZXIubnZpZGlhLmNvbS9jdWRhLTExLjgtZG93bmxvYWQtYXJjaGl2ZSJ9"

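# The archive extracts to a folder named cudnn-linux-x86_64-8.7.0.84_cuda11-archive; copy its files into /usr/local/cuda-11.8

tar xvf cudnn-linux-x86_64-8.7.0.84_cuda11-archive.tar.xz

# Copy the cuDNN headers and libraries (-P keeps the libcudnn*.so symlinks as symlinks)

cd cudnn-linux-x86_64-8.7.0.84_cuda11-archive

sudo cp ./include/* /usr/local/cuda-11.8/include/

sudo cp -P ./lib/* /usr/local/cuda-11.8/lib64/

sudo chmod a+r /usr/local/cuda-11.8/include/cudnn*.h /usr/local/cuda-11.8/lib64/libcudnn*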
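cuDNN 8 records its version in cudnn_version.h as `CUDNN_MAJOR`, `CUDNN_MINOR`, and `CUDNN_PATCHLEVEL` defines (`grep CUDNN_MAJOR -A 2 /usr/local/cuda-11.8/include/cudnn_version.h` shows the same lines). A minimal Python sketch that reads them, assuming the header was copied to the path used above; `cudnn_version` is a hypothetical helper name.

```python
import re
from pathlib import Path

# Header location assumed from the copy step above.
HEADER = Path("/usr/local/cuda-11.8/include/cudnn_version.h")


def cudnn_version(header_text: str) -> str:
    """Read CUDNN_MAJOR/MINOR/PATCHLEVEL from cudnn_version.h text."""
    parts = []
    for name in ("CUDNN_MAJOR", "CUDNN_MINOR", "CUDNN_PATCHLEVEL"):
        m = re.search(rf"#define\s+{name}\s+(\d+)", header_text)
        if m is None:
            raise ValueError(f"{name} not found")
        parts.append(m.group(1))
    return ".".join(parts)


if __name__ == "__main__":
    if HEADER.exists():
        print("cuDNN", cudnn_version(HEADER.read_text()))
    else:
        print("cudnn_version.h not found at", HEADER)
```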

4. Install TensorRT 8.5.3

Official instructions: Installation Guide

Download: NVIDIA TensorRT 8.x Download

Installation:

# Unpack

version="8.5.3.1"

installpath=/home/work/tools/gpu/TensorRT-${version}

mkdir -p /home/work/tools/gpu

tar zxvf TensorRT-${version}.Linux.x86_64-gnu.cuda-11.8.cudnn8.6.tar.gz -C /home/work/tools/gpu  # unpacks into ${installpath}

# add LD_LIBRARY_PATH (also append this line to ~/.bashrc to keep it in new shells)

export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:${installpath}/lib

# Python wheels (pip is required: sudo apt install python3-pip)

python3 -m pip install ${installpath}/python/tensorrt-*-cp38-none-linux_x86_64.whl

python3 -m pip install ${installpath}/graphsurgeon/graphsurgeon-0.4.6-py2.py3-none-any.whl

python3 -m pip install ${installpath}/uff/uff-0.6.9-py2.py3-none-any.whl

python3 -m pip install ${installpath}/onnx_graphsurgeon/onnx_graphsurgeon-0.3.12-py2.py3-none-any.whl
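Before running the sample, a quick import check shows whether the wheel installed and whether the TensorRT libraries are found via LD_LIBRARY_PATH. A minimal sketch, assuming the `tensorrt` Python package installed above exposes `__version__` (8.5.3.1 is expected for this tarball); `trt_version` is a hypothetical helper.

```python
import importlib


def trt_version():
    """Return the installed TensorRT Python version string, or None."""
    try:
        trt = importlib.import_module("tensorrt")
    except (ImportError, OSError):
        # Missing wheel, or libnvinfer not found on LD_LIBRARY_PATH.
        return None
    return trt.__version__


if __name__ == "__main__":
    v = trt_version()
    if v is None:
        print("tensorrt not importable; check the wheel install and LD_LIBRARY_PATH")
    else:
        print("TensorRT", v)
```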

# test

cd ${installpath}/samples/python/yolov3_onnx

pip3 install -r requirements.txt

wget https://github.com/pjreddie/darknet/raw/f86901f6177dfc6116360a13cc06ab680e0c86b0/data/dog.jpg

wget https://raw.githubusercontent.com/pjreddie/darknet/f86901f6177dfc6116360a13cc06ab680e0c86b0/cfg/yolov3.cfg

wget -c -O yolov3.weights https://pjreddie.com/media/files/yolov3.weights

python3 yolov3_to_onnx.py

5. Install PyTorch/TensorFlow and verify that CUDA works

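# Install PyTorch/TensorFlow with pip

sudo apt install python3-pip

pip3 install --upgrade pip

pip3 install torch --index-url https://download.pytorch.org/whl/cu118  # CUDA 11.8 build of PyTorch

pip3 install tensorflow==2.14.0  # the TensorFlow release that pairs with CUDA 11.8 + cuDNN 8.7 (TensorRT was already installed from its wheel in step 4)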

# Write a PyTorch/TensorFlow test script

vim test_gpu.py

# test torch

import torch

print("Torch CUDA: ", torch.cuda.is_available())

device = torch.device("cuda:0")

print(device)

print(torch.cuda.get_device_name(0))

print(torch.rand(3,3).cuda())

print(torch.rand(5,5).cuda() + torch.rand(5,5).cuda())

# test tensorflow

import tensorflow as tf

print("Tensorflow GPU: ", tf.test.is_gpu_available())

# Test whether the GPU works

python3 test_gpu.py

Torch CUDA: True

cuda:0

GeForce RTX 2070

tensor([[0.9530, 0.4746, 0.9819],

[0.7192, 0.9427, 0.6768],

[0.8594, 0.9490, 0.6551]], device='cuda:0')

Created device /device:GPU:0 with 6412 MB memory: -> device: 0, name: NVIDIA GeForce RTX 2070, pci bus id: 0000:01:00.0, compute capability: 7.5

Tensorflow GPU: True

nvidia-smi -l  # keep refreshing to watch GPU usage and the processes using the GPU
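`nvidia-smi -l` redraws the full table; for scripted monitoring, nvidia-smi's `--query-gpu` and `--format=csv,noheader,nounits` options print one comma-separated line per GPU. A minimal Python sketch that parses that output, assuming the field list in `QUERY` (memory in MiB, utilization in percent); a field that reports `[N/A]` would raise ValueError in this simple version. `parse_smi_csv` is a hypothetical helper.

```python
import csv
import io
import subprocess

QUERY = "index,name,memory.used,memory.total,utilization.gpu"


def parse_smi_csv(text: str) -> list:
    """Turn `--format=csv,noheader,nounits` output into one dict per GPU."""
    rows = []
    for rec in csv.reader(io.StringIO(text), skipinitialspace=True):
        if not rec:
            continue
        idx, name, used, total, util = rec
        rows.append({
            "index": int(idx),
            "name": name,
            "mem_used_mib": int(used),
            "mem_total_mib": int(total),
            "util_pct": int(util),
        })
    return rows


if __name__ == "__main__":
    try:
        out = subprocess.run(
            ["nvidia-smi", f"--query-gpu={QUERY}", "--format=csv,noheader,nounits"],
            capture_output=True, text=True, check=True,
        ).stdout
        for gpu in parse_smi_csv(out):
            print(gpu)
    except (FileNotFoundError, subprocess.CalledProcessError) as err:
        print("nvidia-smi unavailable:", err)
```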

yan 24.2.1 0:18
